How to write and submit a correct robots.txt

A correct robots.txt file lives at the root of your site and should contain the following: at least one user-agent, one or more allow or disallow rules, and a sitemap link.

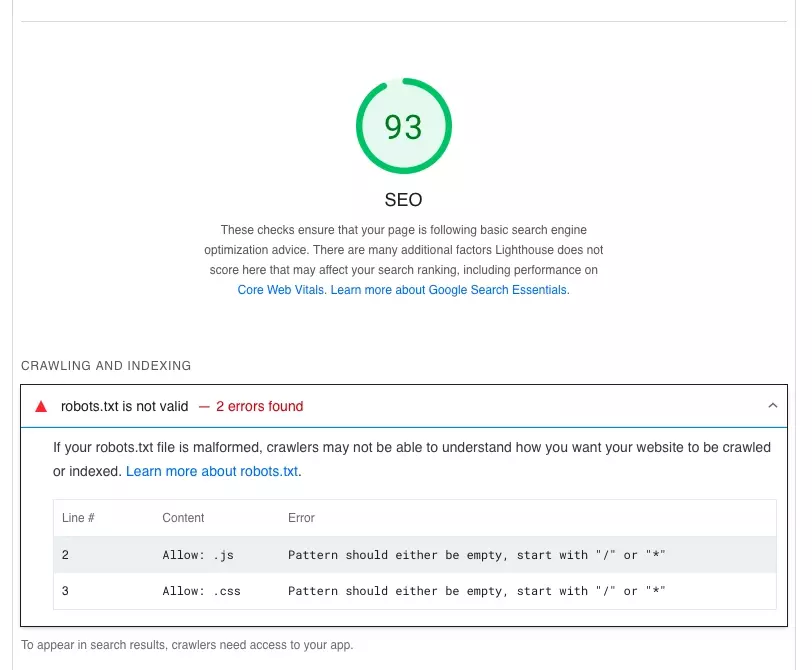

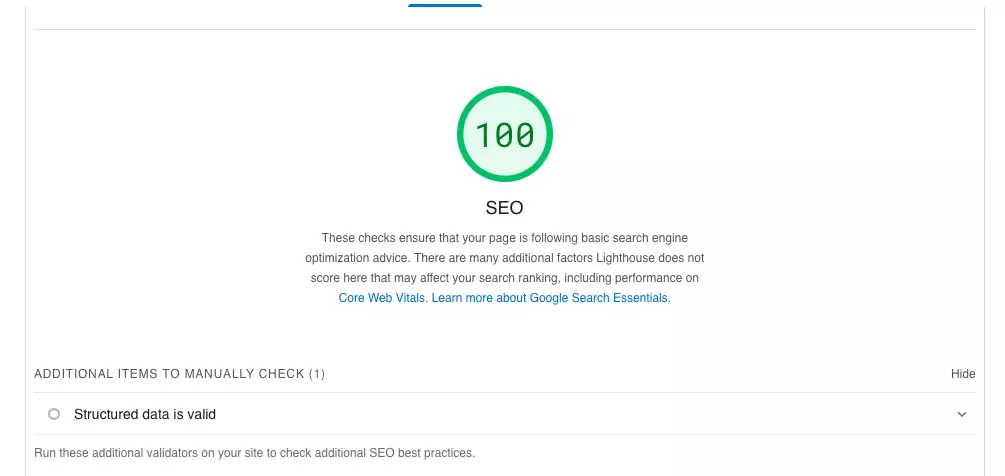

This article explains the right way to write and submit a robots.txt file. We wrote it after submitting an incorrect robots.txt file that damaged our SEO.

Luckily, thanks to the good people at Google, we discovered how to write a correct robots.txt file and resolved our issue.

Writing a Correct Robots.txt

A correct robots.txt file contains three things:

- At least one user-agent

- At least one allow or disallow rule

- A sitemap URL
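To make the three parts concrete, here is a minimal Python sketch that embeds a hypothetical robots.txt for www.example.com (the `/admin/` path and the sitemap location are assumptions for illustration) and checks it with the standard library's `urllib.robotparser` module. Parsing the text locally like this is one way to sanity-check your rules before uploading the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com containing the three parts:
# a user-agent line, allow/disallow rules, and a Sitemap line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Pages outside /admin/ are allowed; /admin/ is blocked for every crawler.
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/"))        # True
print(parser.can_fetch("Googlebot", "https://www.example.com/admin/login"))  # False
# site_maps() (Python 3.8+) returns the Sitemap URLs found in the file.
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```

Python's parser applies the first rule in a group that matches, while Google documents that the most specific (longest) matching rule wins. Listing the narrower `Disallow` before the broad `Allow` keeps both interpretations in agreement.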

Examples of robots.txt files for one or more user-agents can be found at the link below.
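When a file has more than one user-agent group, a crawler follows the group that names it and ignores the `*` group. The sketch below uses a hypothetical two-group file (the `/drafts/` and `/private/` paths and the `SomeOtherBot` crawler name are invented for illustration) and again relies on `urllib.robotparser` to show which group applies to which crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a Googlebot-specific group and a catch-all group.
MULTI_AGENT_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(MULTI_AGENT_ROBOTS_TXT.splitlines())

# Googlebot matches its own group, so only /drafts/ is blocked for it
# and the "*" group's /private/ rule does not apply.
print(parser.can_fetch("Googlebot", "https://www.example.com/drafts/post"))     # False
print(parser.can_fetch("Googlebot", "https://www.example.com/private/page"))    # True
# Any other crawler (hypothetical "SomeOtherBot") falls back to the "*" group.
print(parser.can_fetch("SomeOtherBot", "https://www.example.com/private/page"))  # False
print(parser.can_fetch("SomeOtherBot", "https://www.example.com/drafts/post"))   # True
```

This is why adding a crawler-specific group can quietly remove rules you meant to apply to every crawler: repeat any shared rules inside each group.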

Please note: if your robots.txt file does not point to the correct sitemap URL, search engines may not find your sitemap, and your SEO can suffer badly.
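Because a wrong sitemap URL is easy to miss, a small pre-upload check can help. The function below is a minimal sketch, not a complete validator: `check_sitemap` and its `expected_sitemap` parameter are hypothetical names. It only verifies that every `Sitemap` line holds an absolute http(s) URL, as the sitemaps protocol asks for, and that the URL you expect is actually listed.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def check_sitemap(robots_txt: str, expected_sitemap: str) -> list[str]:
    """Hypothetical helper: return a list of Sitemap problems (empty list = looks OK)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap lines.
    sitemaps = parser.site_maps() or []

    problems = []
    if not sitemaps:
        problems.append("no Sitemap line found")
    for url in sitemaps:
        parts = urlparse(url)
        # Sitemap lines should hold a full URL, not a relative path.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"sitemap URL is not absolute: {url!r}")
    if sitemaps and expected_sitemap not in sitemaps:
        problems.append(f"expected sitemap {expected_sitemap!r} is not listed")
    return problems


good = "User-agent: *\nDisallow:\n\nSitemap: https://www.example.com/sitemap.xml\n"
bad = "User-agent: *\nDisallow:\n\nSitemap: /sitemap.xml\n"
expected = "https://www.example.com/sitemap.xml"

print(check_sitemap(good, expected))  # []
print(check_sitemap(bad, expected))
```

An empty `Disallow:` value blocks nothing, so both sample files allow crawling of the whole site; only their `Sitemap` lines differ.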

Submitting a correct robots.txt file

A robots.txt file must live at the root of your site.

If your domain is https://www.example.com then your robots.txt must be found at https://www.example.com/robots.txt.
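The root-of-site rule can be expressed as a small Python function. This is a sketch: `robots_txt_url` is a hypothetical helper, and it relies on `urllib.parse.urljoin` replacing the whole path (and dropping any query string or fragment) when it is given an absolute path such as `/robots.txt`.

```python
from urllib.parse import urljoin, urlsplit


def robots_txt_url(page_url: str) -> str:
    """Hypothetical helper: return the robots.txt URL for the site that hosts page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Joining an absolute path keeps scheme, host, and port but replaces
    # the path and drops the query string and fragment.
    return urljoin(page_url, "/robots.txt")


print(robots_txt_url("https://www.example.com/blog/post?ref=home"))
# https://www.example.com/robots.txt
```

Because the file is tied to the scheme, host, and port, a subdomain such as `blog.example.com` needs its own robots.txt at `https://blog.example.com/robots.txt`.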