Neural Radiance Fields (NeRFs), Spatial Computing & Augmented Reality @ WWDC2022

A day before WWDC 2022, Adrian Kaehler suggested that Apple would announce Neural Radiance Fields (NeRFs). But what are they, and how could Apple or the world employ them?

While our founder was walking through Apple Park to check in for Apple's Worldwide Developers Conference (June 6th, 2022), he received a LinkedIn notification about the future of Augmented Reality, Mixed Reality, Robotics and Autonomous Vehicles.

Adrian Kaehler, a pioneer in Spatial Computing and Augmented Reality, sat down with Robert Scoble, a leading technology evangelist, and the two predicted that Apple would announce Neural Radiance Fields (NeRFs) as part of its offering during the WWDC 2022 keynote on June 6th.

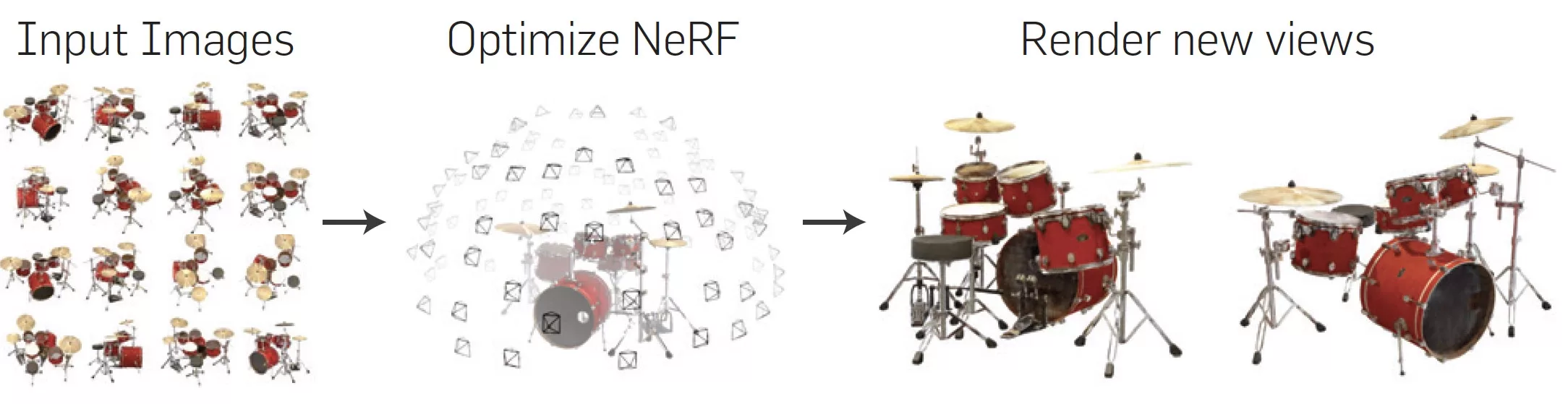

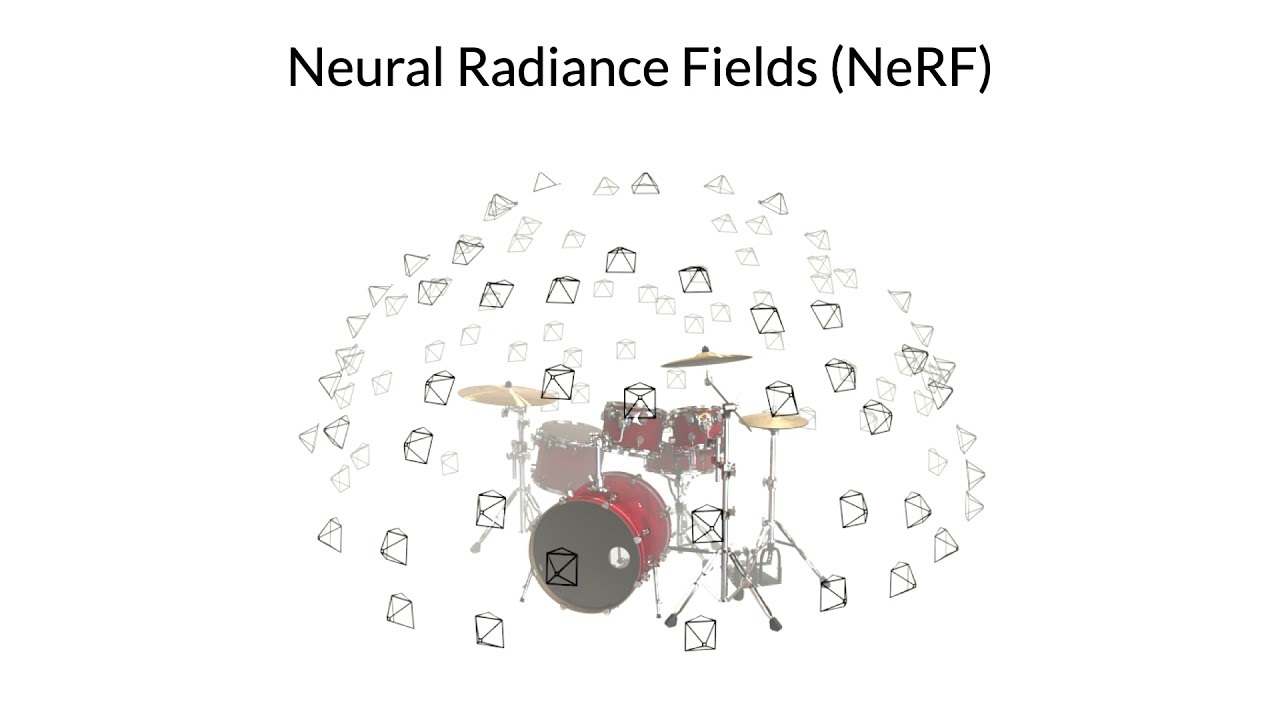

What are Neural Radiance Fields?

It's an AI technology that takes 2D photos or 2D videos from a cheap 2D camera, like your iPhone, and converts them into a 3D scene, which a robot could move around in, or an autonomous car could drive in, or which augmented reality glasses could see.

Robert Scoble

The NeRF itself is a way of representing spatial information that leverages AI technology, specifically deep neural networks, but what you just listed are things you could do with a NeRF.

The core idea of a Neural Radiance Field is to use a neural network to represent a field of light. As a practical matter, it appears that this representation can be extremely compact and efficient, allowing you to incrementally build up a field that represents huge amounts of space, which might be useful for video games or simulation environments for autonomous cars, or for models of objects, which is useful for video games or robotics.

There are many examples of how Neural Radiance Field technology can change the way we solve a lot of problems that we have solutions for now, but that aren't nearly as good.

Adrian Kaehler

According to the published literature, a Neural Radiance Field (NeRF) is a neural network that learns a 3D representation of a scene from a set of 2D images or video frames of that scene.

The beauty of this technology is that it uses Artificial Intelligence (AI) to let you view the 3D environment from any point in the scene, including viewpoints that no camera captured.
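To make the idea concrete, here is a minimal Python sketch of how a radiance field can be turned into a pixel from an arbitrary viewpoint. It is not Apple's implementation and it is not a trained NeRF: `toy_radiance_field` is a hypothetical stand-in that hard-codes a red sphere where a real NeRF would query a neural network, and `render_ray`, its parameters and its default values are our own illustrative assumptions. The compositing step follows the volume rendering approach described in the NeRF literature: sample points along a camera ray, ask the field for a color and a density at each point, and blend the results front to back.

```python
import math


def toy_radiance_field(position, direction):
    """Hypothetical stand-in for a trained NeRF network.

    A real NeRF maps a 3D position and a viewing direction to a color and a
    volume density. Here we hard-code a red sphere of radius 1 at the origin
    so that the example runs without any training.
    """
    x, y, z = position
    inside = x * x + y * y + z * z <= 1.0
    density = 5.0 if inside else 0.0   # how much light is blocked at this point
    color = (1.0, 0.0, 0.0)            # RGB; a real NeRF varies this with direction
    return color, density


def render_ray(field, origin, direction, near=0.0, far=6.0, num_samples=128):
    """Blend the field's colors along one camera ray into a single pixel."""
    step = (far - near) / num_samples
    transmittance = 1.0                # fraction of light not yet blocked
    pixel = [0.0, 0.0, 0.0]
    for i in range(num_samples):
        t = near + (i + 0.5) * step    # sample at the midpoint of each segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        color, density = field(point, direction)
        alpha = 1.0 - math.exp(-density * step)   # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - alpha
    return tuple(pixel)


if __name__ == "__main__":
    # Two different viewpoints looking at the same scene.
    front = render_ray(toy_radiance_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0))
    side = render_ray(toy_radiance_field, (-3.0, 0.0, 0.0), (1.0, 0.0, 0.0))
    print("front view pixel:", front)
    print("side view pixel:", side)
```

Because the scene lives in the field rather than in any single photo, picking a new viewing angle only means casting rays from a new origin and direction. Rendering a full image repeats `render_ray` once per pixel.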

It is considered the latest frontier of Spatial Computing because it lets you capture something in the world and give people anywhere on the planet access to it in real time, from any point of view in the scene.

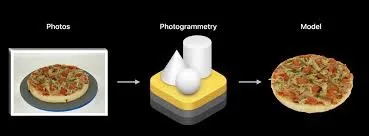

Is Apple already using NeRFs?

Although Adrian and Robert did not mention it explicitly, we believe that Apple is already using NeRFs to let you create 3D models using a LiDAR sensor and a technique known as photogrammetry.

Apple makes this technology available to developers through ARKit and RealityKit, and offers it to the general public through Object Capture.

Where is Apple headed with NeRFs and Spatial Computing?

Robert and Adrian discussed how Apple is set to change the way we watch sports, and talked at length about how it could let you put yourself anywhere on the planet via a headset.

Having developed virtual reality for the HTC Vive and the Meta Quest using Unity, we do not believe in hour-long or multi-hour headset experiences, because of the temporary physiological effects and other health-related issues that occur when you spend prolonged periods wearing goggles or headsets.

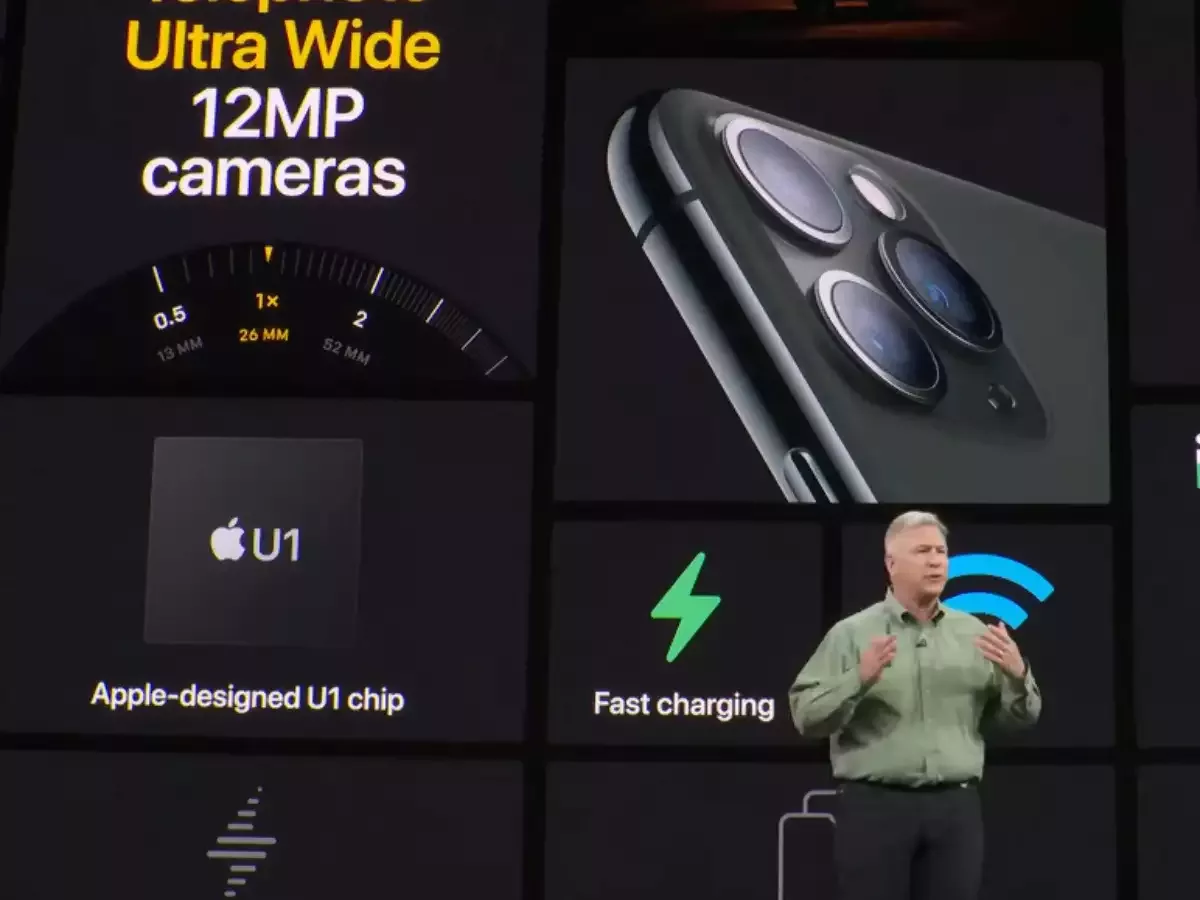

However, thanks to the Neural Engine in Apple's M1 chip, combined with 5G and the Apple U1 chip (which has been hinted to be coming to Macs and which allows data transfers of up to 8 megabits per second), Apple is already positioned to push the frontier of Spatial Computing by letting you pick your viewing angle on devices that you already own: your iPhone and iPad, and soon your Mac or Apple TV.

Watch sports from any angle on your Apple TV, Mac, iPhone or iPad

As mentioned above, we believe that the next evolution in Spatial Computing that Apple will announce later today is centered on letting you move within a live 3D environment on a 2D screen.

We believe that Apple will first offer it through its Friday Night Baseball partnership with MLB, but we would like to paint a picture of how it could work in other sports such as American Football or Football (Soccer).

Witness Odell Beckham Jr. or Karim Benzema as if you were next to them

NeRFs could have let you stand behind Karim Benzema as he scored the tie-breaking penalty against Manchester City.

SoFi Stadium, home of the Los Angeles Chargers and Rams, and Allegiant Stadium, home of the Las Vegas Raiders, are pioneering high-tech stadiums and are fully equipped with 5G. This suggests that they are ready to be the first to have NeRFs created around them, enabling you to watch a game from home and pick a viewing angle better than any seat in the stadium.

We believe that this kind of experience will break into Football (Soccer) through the Santiago Bernabéu, the home of Real Madrid, which is currently being rebuilt with the aim of becoming the best stadium on the planet.

Learn more about the authors behind the Twitter stream

Who is Adrian Kaehler?

Adrian Kaehler is an American scientist, engineer, entrepreneur, inventor and author who is considered a pioneer and leader in the field of computer vision due to his contributions to the OpenCV library, his work building the first autonomous car at Stanford University as part of the 2005 DARPA Grand Challenge, and his fundamental role in key parts of the Magic Leap system as Vice President of Core Software.

Who is Robert Scoble?

Robert Scoble is an American blogger, technical evangelist and author, best known for his blog, Scobleizer, which focuses on Spatial Computing strategies.

Any Questions?

Please send a note to inquiries@delasign.com with any thoughts or feedback you may have.