How are Generative User Interfaces created?

Generative UI leverages large language models (LLMs), design systems and dynamic templates to transform intent into an accurate visual outcome.

Please note that since releasing this article, the following events, advancements and updates have occurred:

- OpenAI released Structured Outputs (August 6th, 2024).

These updates have been included in the latest version of this article.

In 2024, Generative UI, or GenUI, is considered the future of interfaces and is currently best demonstrated by Brain.ai's Natural AI (video above) or Google Gemini (video below).

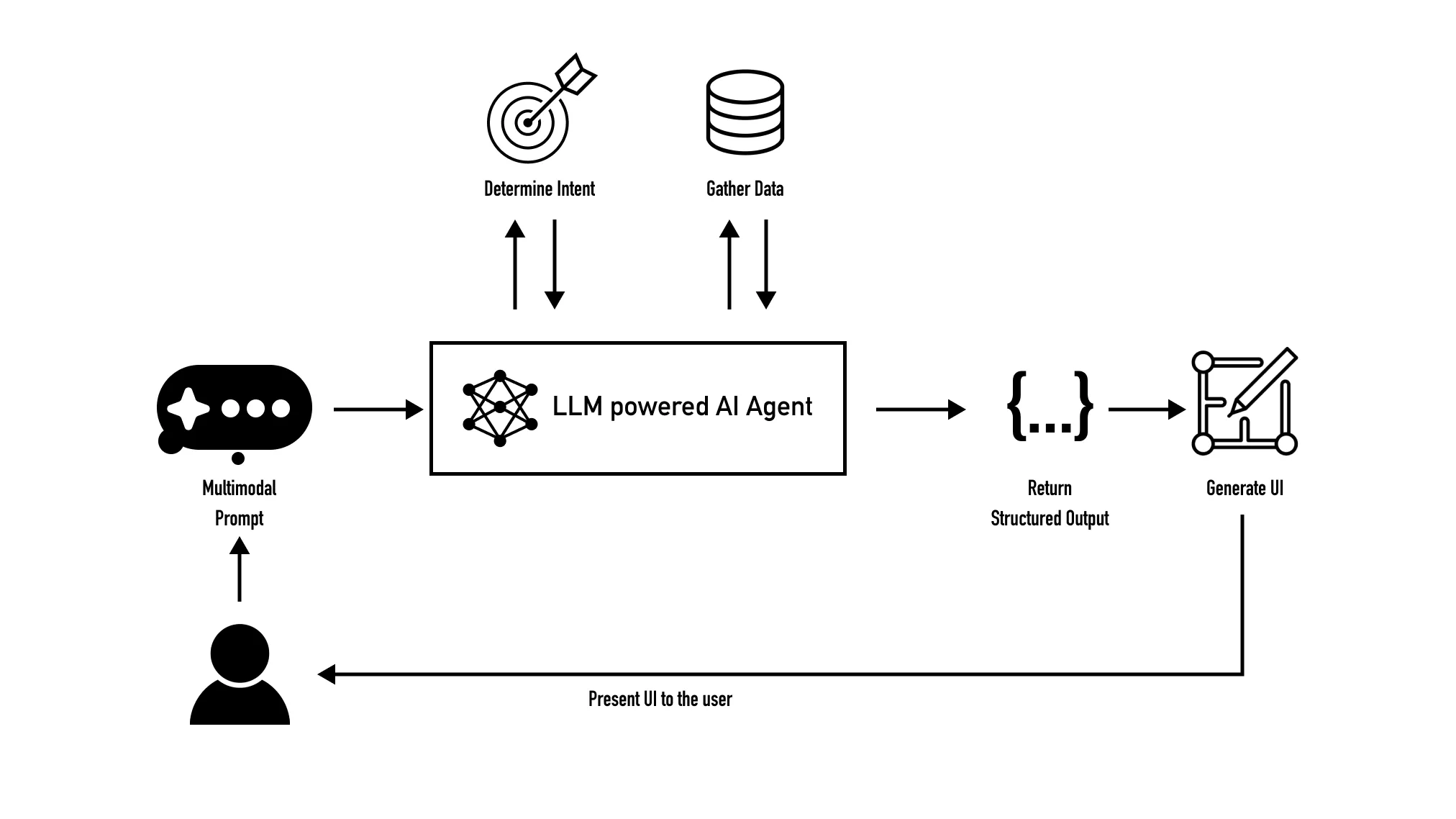

The way that systems (i.e. apps or websites) produce Generative UI is as follows:

A user sends a multimodal prompt to an LLM-powered AI agent, which determines the user's intent and queries a database to gather the required data.

The AI agent then transforms the data gathered from the database into a format, known as a structured output, which can be consumed by a dynamic template, producing a user interface that meets the user's request.
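The flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product implements it: the LLM call is replaced by a simple keyword check, the database is an in-memory dictionary with made-up rows, and every name (`Prompt`, `determine_intent`, `build_structured_output`, `render`, the `card_list` component) is hypothetical.

```python
import html
import json
from dataclasses import dataclass


@dataclass
class Prompt:
    """A multimodal prompt: free text plus optional context data."""
    text: str
    context: dict


# Hypothetical in-memory "database" with made-up rows, for illustration only.
DATABASE = {
    "flights": [
        {"title": "Flight A", "detail": "Departs 09:00"},
        {"title": "Flight B", "detail": "Departs 14:30"},
    ],
    "hotels": [
        {"title": "Hotel C", "detail": "2 km from centre"},
    ],
}


def determine_intent(prompt: Prompt) -> str:
    """Stand-in for the LLM: pick the first table name found in the prompt text."""
    for table in DATABASE:
        if table in prompt.text.lower():
            return table
    raise ValueError("could not determine intent")


def build_structured_output(prompt: Prompt) -> str:
    """Agent step: resolve the intent, query the data, emit JSON in a fixed shape."""
    intent = determine_intent(prompt)
    rows = DATABASE[intent]
    return json.dumps(
        {"component": "card_list", "heading": intent.title(), "items": rows}
    )


def render(structured_output: str) -> str:
    """Dynamic template: turn the structured output into HTML."""
    data = json.loads(structured_output)
    if data["component"] != "card_list":
        raise ValueError(f"unknown component: {data['component']}")
    cards = "".join(
        f"<li><h3>{html.escape(item['title'])}</h3>"
        f"<p>{html.escape(item['detail'])}</p></li>"
        for item in data["items"]
    )
    return f"<section><h2>{html.escape(data['heading'])}</h2><ul>{cards}</ul></section>"


ui = render(build_structured_output(Prompt(text="Show me flights for tomorrow", context={})))
print(ui)
```

The key design point is that the template only ever sees the structured output, never the raw model text. Because the shape is fixed (`component`, `heading`, `items`), the template can reject anything it does not recognise instead of rendering unpredictable content.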

The following example shows how Generative UI could help a business:

Consider a customer searching for sneakers on Nike.com.

- As the customer browses Nike.com, they force press a UI element that represents a sneaker that they are interested in.

- This process activates a microphone function that allows the customer to speak to their smartphone.

- The customer asks Nike.com to find an outfit that matches this shoe.

- The AI agent takes the multimodal intent (i.e. "Find an outfit for this shoe" along with the shoe data) and returns an interface with outfits that the user might like.
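As a rough sketch of the steps above, the snippet below combines the spoken request with the data behind the pressed UI element into one multimodal intent, then returns a description of the interface to display. Everything here is an assumption made for illustration: the catalogue rows are invented, the colour-matching rule stands in for whatever an LLM-powered agent would actually decide, and names such as `MultimodalIntent`, `build_interface` and `outfit_grid` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MultimodalIntent:
    utterance: str      # transcribed speech from the microphone
    pressed_item: dict  # data behind the UI element that was force pressed


# Made-up catalogue rows, for illustration only.
CATALOGUE = [
    {"name": "Track Jacket", "category": "top", "colors": {"black", "white"}},
    {"name": "Running Shorts", "category": "bottom", "colors": {"red"}},
    {"name": "Jogger Pants", "category": "bottom", "colors": {"black"}},
]


def find_matching_items(intent: MultimodalIntent) -> list[dict]:
    """Stand-in for the agent: keep items that share a colour with the shoe."""
    if "outfit" not in intent.utterance.lower():
        return []
    shoe_colors = set(intent.pressed_item["colors"])
    return [item for item in CATALOGUE if item["colors"] & shoe_colors]


def build_interface(intent: MultimodalIntent) -> dict:
    """Return a structured description that a dynamic template could render."""
    matches = find_matching_items(intent)
    return {
        "component": "outfit_grid",
        "title": f"Outfits for {intent.pressed_item['name']}",
        "tiles": [{"label": m["name"], "slot": m["category"]} for m in matches],
    }


intent = MultimodalIntent(
    utterance="Find an outfit for this shoe",
    pressed_item={"name": "Example Sneaker", "colors": ["black"]},
)
interface = build_interface(intent)
print(interface)
```

Neither input is enough on its own: the utterance says what the customer wants ("an outfit"), while the pressed element supplies what "this shoe" refers to.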
